Artificial intelligence (AI) and deep learning have revolutionized multiple fields, from healthcare and finance to autonomous vehicles and natural language processing. Among the many deep learning frameworks available today, PyTorch stands out for its ease of use, flexibility, and strong community support. In this blog post, we will explore PyTorch in detail, covering its features, pros and cons, examples, and how to build AI models efficiently with this powerful framework.

What is PyTorch?

PyTorch is an open-source machine learning framework originally developed by Facebook’s AI Research lab (FAIR). It is widely used for deep learning applications thanks to its dynamic computation graph, user-friendly API, and seamless integration with Python. PyTorch builds on the Torch library and provides tensor computation with GPU acceleration, making it an excellent choice for AI research and development.

Key Features of PyTorch

1. Dynamic Computation Graph

Unlike the static graph approach of TensorFlow 1.x, PyTorch uses a dynamic computation graph, meaning the graph is built at runtime as operations execute. This makes debugging easier and allows for more intuitive model development.
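To make the idea concrete, here is a minimal sketch of a model whose depth is decided by an ordinary Python loop at call time. The class name DynamicDepthNet and the num_steps argument are made up for this illustration; they are not part of PyTorch.

```python
import torch
import torch.nn as nn


class DynamicDepthNet(nn.Module):
    """Hypothetical module whose effective depth is chosen per call."""

    def __init__(self):
        super().__init__()
        self.layer = nn.Linear(4, 4)

    def forward(self, x, num_steps):
        # Ordinary Python control flow: the graph is rebuilt on every call,
        # so the number of applied layers can differ between calls.
        for _ in range(num_steps):
            x = torch.relu(self.layer(x))
        return x


torch.manual_seed(0)
net = DynamicDepthNet()
inputs = torch.randn(2, 4)

out_shallow = net(inputs, num_steps=1)  # one pass through the layer
out_deep = net(inputs, num_steps=3)     # three passes, same module
print(out_shallow.shape, out_deep.shape)
```

Because the loop is plain Python, you can set a breakpoint or add a print statement inside forward and inspect intermediate tensors directly.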

2. GPU Acceleration

PyTorch supports GPU acceleration seamlessly, enabling faster computation and efficient model training.
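A common pattern, sketched below, is to pick a device once and create or move tensors onto it. The variable names are only for illustration, and the code falls back to the CPU when no CUDA GPU is available.

```python
import torch

# Pick the GPU when one is visible to PyTorch, otherwise fall back to the CPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

a = torch.ones(3, 3, device=device)  # created directly on the chosen device
b = torch.eye(3).to(device)          # created on the CPU, then moved
c = a @ b                            # runs on whichever device holds a and b

print(c.device.type)
```

The same .to(device) call works for models, so the rest of the code does not need to know which device is in use.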

3. Easy-to-Use API

PyTorch provides a simple, Pythonic API that makes building deep learning models straightforward. Researchers and developers often find it more intuitive than many other frameworks.

4. Strong Community Support

PyTorch has an active and growing community that provides extensive documentation, tutorials, and third-party libraries for enhanced functionality.

5. Integration with Other Libraries

PyTorch also integrates well with NumPy, SciPy, and other Python libraries, allowing for smooth development workflows.
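One detail worth knowing about the NumPy integration is that torch.from_numpy shares memory with the source array on the CPU, while torch.tensor makes a copy. The short sketch below illustrates the difference; the variable names are arbitrary.

```python
import numpy as np
import torch

arr = np.zeros(3, dtype=np.float32)
shared = torch.from_numpy(arr)  # shares memory with arr (CPU only)
copied = torch.tensor(arr)      # makes an independent copy

arr[0] = 7.0                    # modify the NumPy array in place
print(shared[0].item(), copied[0].item())  # the shared tensor sees the change
```

Sharing memory avoids a copy for large arrays, but it also means in-place changes on one side are visible on the other.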

What is PyTorch Used For?

PyTorch is widely used across various AI and ML applications, including but not limited to:

1. Computer Vision

- Image classification, object detection, and segmentation.

- Medical image analysis and anomaly detection.

2. Natural Language Processing (NLP)

- Sentiment analysis, machine translation, and chatbots.

- Text summarization and speech-to-text models.

3. Generative AI

- GANs (Generative Adversarial Networks) for image synthesis.

- Style transfer and deepfake applications.

4. Reinforcement Learning

- Training AI agents for robotics and gaming applications.

5. Scientific Computing & Research

- Simulations and numerical calculations.

- Prototyping and rapid testing of deep learning models.

Pros and Cons of PyTorch

Pros:

- Ease of Use: PyTorch’s Pythonic nature makes it intuitive for developers and researchers.

- Dynamic Computation Graph: Allows for easy debugging and modification of neural networks.

- Strong Community Support: The framework is backed by Meta (formerly Facebook) and widely used in academia and industry.

- Seamless GPU Acceleration: Switches easily between CPU and GPU with minimal code changes.

- Flexible Deployment: PyTorch provides deployment options such as TorchScript and ONNX export, and higher-level libraries like PyTorch Lightning help structure training code.

Cons:

- Higher Memory Consumption: Compared to TensorFlow, PyTorch can be more memory-intensive for some workloads.

- Less Mature for Production: Although PyTorch is improving here, TensorFlow has historically been preferred for large-scale deployments.

- Limited Support for Mobile & Edge AI: Although PyTorch Mobile is improving, TensorFlow Lite is still more optimized for mobile applications.

Installing PyTorch

Before using PyTorch, you need to install it. You can install it with pip by running the following command:

bash CODE

pip install torch torchvision torchaudio

Alternatively, you can visit the official PyTorch website (https://pytorch.org/) to find installation instructions tailored to your system.
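After installing, a quick check like the following sketch can confirm that PyTorch imports correctly and whether it can see a CUDA GPU. The printed values depend on your machine.

```python
import torch

print(torch.__version__)          # installed PyTorch version string
print(torch.cuda.is_available())  # True only if a usable CUDA GPU is detected
```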

Understanding Tensors in PyTorch

Tensors are the fundamental building blocks in PyTorch. They are similar to NumPy arrays but offer additional capabilities such as GPU acceleration.
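Every tensor carries a shape, a data type, and a device. The short sketch below inspects these attributes, assuming PyTorch's default settings (float32 on the CPU).

```python
import torch

t = torch.zeros(2, 3)  # 2x3 tensor filled with zeros
print(t.shape)         # torch.Size([2, 3])
print(t.dtype)         # torch.float32 under the default settings
print(t.device)        # cpu, unless created on or moved to another device
```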

1. Creating Tensors

Python CODE

import torch

# Create a 1-D tensor from a Python list
x = torch.tensor([1, 2, 3])
print(x)

2. Performing Operations on Tensors

Python CODE

y = torch.tensor([4, 5, 6])
result = x + y  # element-wise addition
print(result)

3. Converting Between NumPy and PyTorch Tensors

Python CODE

import numpy as np
import torch

# Convert NumPy array to PyTorch tensor
np_array = np.array([1, 2, 3])
tensor = torch.from_numpy(np_array)

# Convert PyTorch tensor to NumPy array
np_array_back = tensor.numpy()

Building a Simple Neural Network with PyTorch

Now, let’s build a simple neural network using PyTorch’s torch.nn module. We’ll create a basic feedforward neural network for classifying handwritten digits using the MNIST dataset.

Step 1: Import Required Libraries

Python CODE

import torch
import torch.nn as nn
import torch.optim as optim
import torchvision
import torchvision.transforms as transforms

Step 2: Load the MNIST Dataset

Python CODE

# Convert images to tensors and normalize pixel values to roughly [-1, 1]
transform = transforms.Compose([transforms.ToTensor(), transforms.Normalize((0.5,), (0.5,))])

# Download the training split and wrap it in a shuffling batch loader
trainset = torchvision.datasets.MNIST(root='./data', train=True, download=True, transform=transform)
trainloader = torch.utils.data.DataLoader(trainset, batch_size=32, shuffle=True)

Step 3: Define the Neural Network Model

Python CODE

class SimpleNN(nn.Module):
    def __init__(self):
        super().__init__()
        self.fc1 = nn.Linear(28*28, 128)  # input layer: 784 pixels -> 128 units
        self.fc2 = nn.Linear(128, 10)     # output layer: one score per digit

    def forward(self, x):
        x = x.view(-1, 28*28)             # flatten each image to a vector
        x = torch.relu(self.fc1(x))
        x = self.fc2(x)
        return x

Step 4: Train the Model
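Before running the full training loop, it can help to push one random batch through the network and confirm that the output shape and loss look sensible. The sketch below uses an nn.Sequential stand-in with the same layer sizes as SimpleNN so that it runs on its own; the fake_batch and fake_labels names are made up for this illustration.

```python
import torch
import torch.nn as nn

# Stand-in with the same layer sizes as SimpleNN (assumption: swap in the
# real SimpleNN class when running this alongside the tutorial code).
model = nn.Sequential(
    nn.Flatten(),
    nn.Linear(28 * 28, 128),
    nn.ReLU(),
    nn.Linear(128, 10),
)

fake_batch = torch.randn(32, 1, 28, 28)    # random stand-in for MNIST images
fake_labels = torch.randint(0, 10, (32,))  # random class labels 0-9

logits = model(fake_batch)                         # forward pass
loss = nn.CrossEntropyLoss()(logits, fake_labels)  # scalar loss
print(logits.shape, loss.item())
```

If the logits come out with one row per image and ten columns, the model and loss function are wired together correctly.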

Python CODE

model = SimpleNN()
criterion = nn.CrossEntropyLoss()
optimizer = optim.SGD(model.parameters(), lr=0.01)

for epoch in range(5):
    for images, labels in trainloader:
        optimizer.zero_grad()             # clear gradients from the previous step
        output = model(images)            # forward pass
        loss = criterion(output, labels)  # compute the loss
        loss.backward()                   # backpropagate
        optimizer.step()                  # update the weights

    # Loss of the last batch in this epoch
    print(f'Epoch {epoch+1}, Loss: {loss.item()}')

Complete Example: Combined Code

Python CODE

import torch
import torch.nn as nn
import torch.optim as optim
from torchvision import datasets, transforms

# Load dataset
transform = transforms.Compose([transforms.ToTensor(), transforms.Normalize((0.5,), (0.5,))])
train_dataset = datasets.MNIST(root='./data', train=True, download=True, transform=transform)
train_loader = torch.utils.data.DataLoader(train_dataset, batch_size=64, shuffle=True)

# Define the neural network class
class NeuralNet(nn.Module):
    def __init__(self):
        super().__init__()
        self.fc1 = nn.Linear(28 * 28, 128)
        self.fc2 = nn.Linear(128, 64)
        self.fc3 = nn.Linear(64, 10)

    def forward(self, x):
        x = x.view(-1, 28*28)
        x = torch.relu(self.fc1(x))
        x = torch.relu(self.fc2(x))
        x = self.fc3(x)
        return x

# Initialize model, loss function, and optimizer
model = NeuralNet()
criterion = nn.CrossEntropyLoss()
optimizer = optim.Adam(model.parameters(), lr=0.001)

# Training loop
for epoch in range(5):
    for images, labels in train_loader:
        optimizer.zero_grad()
        output = model(images)
        loss = criterion(output, labels)
        loss.backward()
        optimizer.step()

    # Loss of the last batch in this epoch
    print(f'Epoch {epoch+1}, Loss: {loss.item():.4f}')

Advanced PyTorch Concepts

1. Transfer Learning

Transfer learning allows you to use pre-trained models and fine-tune them for your specific task. The companion torchvision library provides many pre-trained models through its torchvision.models module.

Python CODE

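from torchvision import models

# Load a ResNet-18 model with pre-trained ImageNet weights
# (torchvision 0.13+; older releases used pretrained=True instead)
resnet = models.resnet18(weights=models.ResNet18_Weights.DEFAULT)

2. Autograd and Backpropagation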

PyTorch’s autograd module automatically computes gradients, which makes backpropagation easier.

Python CODE

x = torch.tensor(2.0, requires_grad=True)

y = x ** 2

y.backward()

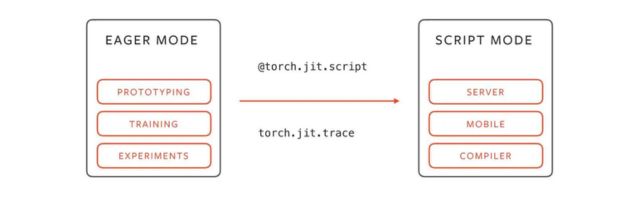

print(x.grad) # Output: 4.03. Deployment with TorchScript

PyTorch models can be converted to TorchScript for optimized deployment on different platforms.

Python CODE

# Compile the model to TorchScript and save it to disk
scripted_model = torch.jit.script(model)
torch.jit.save(scripted_model, "model.pth")

Comparing PyTorch with TensorFlow

| Feature | PyTorch | TensorFlow |
|---|---|---|
| Dynamic Computation Graph | Yes | Eager by default in 2.x; static graphs in 1.x and via tf.function |
| Debugging | Easier | More challenging |
| Ease of Use | More Pythonic | More complex syntax |
| Deployment | TorchScript | TensorFlow Serving & TF Lite |
| Community Support | Strong | Very strong |

Conclusion

PyTorch is an excellent choice for building AI models thanks to its flexibility, ease of use, and strong community support. Whether you’re a beginner or an experienced AI researcher, PyTorch provides a robust framework for deep learning applications. Start exploring PyTorch now and use its powerful features to build cutting-edge AI solutions.

If you want to build AI models with ease, PyTorch is well worth trying. Start experimenting today and unlock the potential of deep learning.

PyTorch FAQs

Is PyTorch better than TensorFlow?

It depends on the use case. PyTorch is more user-friendly and often preferred for research, while TensorFlow is frequently used for large-scale production.

Can PyTorch run on GPUs?

Yes, PyTorch supports GPU acceleration, making it well suited for deep learning tasks.

Is PyTorch free?

Yes, PyTorch is an open-source framework and is available for free.

How does PyTorch compare with Keras?

Keras (most commonly used on top of TensorFlow) is more beginner-friendly, whereas PyTorch offers more flexibility for custom AI models.

Is PyTorch good for beginners?

Yes, especially for those already familiar with Python, as its syntax is intuitive and easy to learn.